
The grib_filter option on https://nomads.ncep.noaa.gov is used to download subsets of NCEP's forecasts. As the name suggests, the option works with grib (version 2) files and is a filter. It lets you select regional subsets as well as levels and variables.

As model resolution grows faster than bandwidth or the money to pay for bandwidth, users will have to start using facilities such as grib_filter.

Grib_filter is an interactive facility for downloading subsets of the data, so it seems unsuited to downloading more than a few files. However, we were tricky. Once you learn how to download the data interactively, you can click a button to generate a magic URL. You then give this magic URL to curl or wget to download the data. Using scripting 101, you can write a script to download the data for other times and forecast hours. Using cronjobs 101, you can run that script every day and get your daily forecasts automatically.

All my examples use the bash shell and the curl program. The procedure is simple, so many users have implemented it in other programming languages.

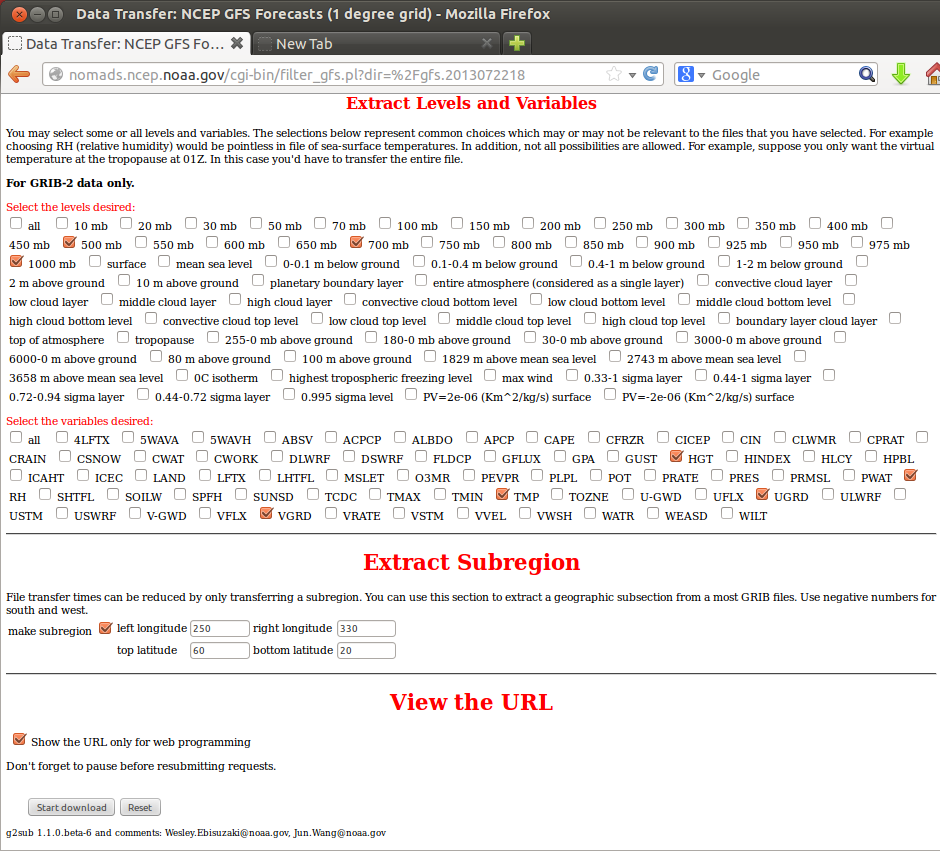

For my example, I am using the 1x1 degree GFS forecasts on https://nomads.ncep.noaa.gov. I selected the directory gfs.2013072218 and the file gfs.t18z.pgrbf00.grib2. Then I selected 3 levels (500, 700 and 1000 mb) and 5 variables (HGT, RH, TMP, UGRD, VGRD). I enabled the subregion option ("make subregion") and selected the domain 20N-60N, 250E-330E.

Finally, I selected the option "Show the URL only for web programming".

When I click on "Download", I get the magic URL:

```
https://nomads.ncep.noaa.gov/cgi-bin/filter_gfs.pl?file=gfs.t18z.pgrbf00.grib2&lev_500_mb=on&lev_700_mb=on&lev_1000_mb=on&var_HGT=on&var_RH=on&var_TMP=on&var_UGRD=on&var_VGRD=on&subregion=&leftlon=250&rightlon=330&toplat=60&bottomlat=20&dir=%2Fgfs.2013072218
```

If you study the above URL, you see that the arguments start after the question mark and are separated by ampersands (&). You may also notice that slashes have been replaced by %2F (%2F translates into a /). This is all standard stuff when working with URLs. If you look closely, you see the name of the file being processed (file=gfs.t18z.pgrbf00.grib2) as well as the directory (dir=%2Fgfs.2013072218).
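To see this structure for yourself, the arguments of a magic URL can be listed one per line. This is a minimal sketch; the function name show_args is hypothetical, and it decodes only the %2F escape mentioned above, not general percent-encoding.

```bash
#!/bin/bash
# Sketch (hypothetical helper): list the arguments of a grib_filter URL,
# one per line. Everything after the first '?' is kept, split on '&',
# and the %2F escape is turned back into a '/'. No other escapes are decoded.
show_args() {
  printf '%s\n' "${1#*[?]}" | tr '&' '\n' | sed 's|%2F|/|g'
}
```

For example, running show_args on the magic URL above prints file=gfs.t18z.pgrbf00.grib2 on the first line and dir=/gfs.2013072218 on the last.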

Here is a prototype script to "execute" the URL and download the file. In the script, I've replaced the date code, cycle hour and forecast hour with variables.

```sh
#!/bin/sh
#
# define URL
#
fhr=00          # forecast hour
hr=18           # cycle (hour of the model run)
date=20130821   # date of the model run, YYYYMMDD
URL="https://nomads.ncep.noaa.gov/cgi-bin/filter_gfs.pl?\
file=gfs.t${hr}z.pgrbf${fhr}.grib2&\
lev_500_mb=on&lev_700_mb=on&lev_1000_mb=on&\
var_HGT=on&var_RH=on&var_TMP=on&var_UGRD=on&\
var_VGRD=on&subregion=&leftlon=250&\
rightlon=330&toplat=60&bottomlat=20&\
dir=%2Fgfs.${date}${hr}"

# download file (quote the URL: it contains & characters)
curl "$URL" -o download.grb

# add a sleep to prevent a denial of service in case of missing file
sleep 1
```

I copied the above script into a file (test.sh), changed the date code, and made it executable with chmod 755. It ran. If it doesn't run, the error message will be in download.grb.

```
ebis@linux-landing:/tmp$ ./test.sh
% Total % Received % Xferd Average Speed Time Time Time Current
Dload Upload Total Spent Left Speed
100 71185 0 71185 0 0 660 0 --:--:-- 0:01:47 --:--:-- 2821
ebis@linux-landing:/tmp$ wgrib2 download.grb
1:0:d=2013082118:HGT:500 mb:anl:
2:6406:d=2013082118:TMP:500 mb:anl:
3:9906:d=2013082118:RH:500 mb:anl:
4:12991:d=2013082118:UGRD:500 mb:anl:
5:18567:d=2013082118:VGRD:500 mb:anl:
6:24143:d=2013082118:HGT:700 mb:anl:
7:30549:d=2013082118:TMP:700 mb:anl:
8:34049:d=2013082118:RH:700 mb:anl:
9:37134:d=2013082118:UGRD:700 mb:anl:
10:42295:d=2013082118:VGRD:700 mb:anl:
11:47456:d=2013082118:TMP:1000 mb:anl:
12:51372:d=2013082118:RH:1000 mb:anl:
13:54457:d=2013082118:UGRD:1000 mb:anl:
14:59618:d=2013082118:VGRD:1000 mb:anl:
15:64779:d=2013082118:HGT:1000 mb:anl
```
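Since a failed request leaves the error message in download.grb instead of grib data, a script can check the start of the file before using it. Every GRIB message begins with the four bytes "GRIB", so a minimal sketch, assuming a server error message never starts with those bytes, could look like this (the name is_grib is hypothetical):

```bash
#!/bin/bash
# Sketch (hypothetical helper): succeed only if the file is non-empty and
# starts with the 4-byte "GRIB" marker that begins every GRIB message.
# A saved error message from the server fails this check.
is_grib() {
  [ -s "$1" ] && [ "$(dd if="$1" bs=4 count=1 2>/dev/null)" = "GRIB" ]
}
```

For example, after curl "$URL" -o download.grb, a script could run is_grib download.grb || cat download.grb to show the error message instead of passing it on to wgrib2.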

Converting the above script to download the required forecast hours, to use the current date, and to run as a cron job is scripting 101.
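As one way to do that scripting-101 step, the prototype can be split into a function that builds the URL and a loop over forecast hours for today's date. This is a minimal sketch, assuming the 2013 file and directory naming used above (gfs.tHHz.pgrbfFF.grib2 in gfs.YYYYMMDDHH), which may not match the current site; the function names, the script name get_gfs.sh, the 00Z cycle and the list of forecast hours are all hypothetical choices.

```bash
#!/bin/bash
# Sketch: download several forecast hours of today's GFS cycle with the same
# fields and subregion as the prototype script. File and directory names follow
# the 2013 example and are assumptions about the server layout.

# Print the grib_filter URL for a date (YYYYMMDD), cycle hour and forecast hour.
build_url() {
  local ymd=$1 hr=$2 fhr=$3
  printf '%s\n' "https://nomads.ncep.noaa.gov/cgi-bin/filter_gfs.pl?\
file=gfs.t${hr}z.pgrbf${fhr}.grib2&\
lev_500_mb=on&lev_700_mb=on&lev_1000_mb=on&\
var_HGT=on&var_RH=on&var_TMP=on&var_UGRD=on&\
var_VGRD=on&subregion=&leftlon=250&\
rightlon=330&toplat=60&bottomlat=20&\
dir=%2Fgfs.${ymd}${hr}"
}

# Download each forecast hour; the cycle and the hour list are example choices.
get_forecasts() {
  local ymd hr=00 fhr
  ymd=$(date -u +%Y%m%d)              # today's date in UTC
  for fhr in 00 06 12 18 24; do
    curl "$(build_url "$ymd" "$hr" "$fhr")" -o "gfs.${ymd}${hr}.f${fhr}.grb"
    sleep 1                           # pause between requests, as in the prototype
  done
}

# Only download when called as "./get_gfs.sh run" (hypothetical script name).
if [ "${1:-}" = "run" ]; then
  get_forecasts
fi
```

To run it every day, a crontab line such as 30 5 * * * cd /path/to/data && ./get_gfs.sh run would work; the time and path are placeholders, and the time should be set after the cycle is normally available on nomads.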

Having problems? Ask your local scripting guru for help. Want to convert the above bash script to Windows? I am sure it can be done, and a Windows guru can help.

Compatibility: as a design criterion, new versions of grib_filter should not break existing user scripts; the API should not change between versions of grib_filter. However, the model will evolve over time, and so will the available fields. That can break user scripts.
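Because a model upgrade can drop or rename fields, a daily script can compare the wgrib2 inventory of each download against the fields it expects. This is a minimal sketch, assuming the inventory format shown earlier (records such as 1:0:d=2013082118:HGT:500 mb:anl:); the function name check_fields is hypothetical.

```bash
#!/bin/bash
# Sketch (hypothetical helper): read a wgrib2 inventory on stdin and check that
# every requested "VAR:LEVEL" pair (for example "TMP:500 mb") appears in it.
# Prints each missing field to stderr and returns 1 if any is missing.
check_fields() {
  local inv field missing=0
  inv=$(cat)                          # the whole inventory as one string
  for field in "$@"; do
    case "$inv" in
      *":$field:"*) ;;                # found, e.g. "...:TMP:500 mb:anl:"
      *) echo "missing: $field" >&2; missing=1 ;;
    esac
  done
  return "$missing"
}
```

For example, wgrib2 download.grb | check_fields "HGT:500 mb" "TMP:1000 mb" returns a non-zero status if either field has disappeared, which a cron job can use to send a warning.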

Comments: the current grib_filter (1.1.0.beta-6) on nomads.ncep.noaa.gov was written back in June 2008. The next version of grib_filter will allow interpolation to different grids and extraction of values at lon-lat locations. The API will be the same, except that the variables and levels will have to be selected from the menu. (This was always the case on the operational nomads but not on the development nomads.)

The screen capture is based on nomads 2013-08. Future screens may look different.

Questions?

The site, nomads.ncep.noaa.gov, is run by NCEP Central Operations (NCO). Questions should be sent to NCO through the nomads help desk. Jun Wang and Wesley Ebisuzaki were responsible for writing the original grib_filter (6/2008), which NCO adapted for their nomads site.

Neither Jun nor Wesley is involved with nomads.ncep.noaa.gov.

Nomads help desk: https://nomads.ncep.noaa.gov/txt_descriptions/Help_Desk_doc.shtml

- This fails: wget -O outputfile https://nomads.ncep.noaa.gov/(rest of line)
  - Add quotes: wget -O outputfile "https://nomads.ncep.noaa.gov/(rest of line)"
- This fails: curl -o outputfile https://nomads.ncep.noaa.gov/(rest of line)
  - Add quotes: curl -o outputfile "https://nomads.ncep.noaa.gov/(rest of line)"
  - In both cases, without quotes the shell treats each & in the URL as the end of a command to be run in the background, so wget or curl only sees the URL up to the first &.
- grib_filter returns the entire field without subsetting
  - the requested box is outside of the domain of the data field
  - the requested box is too small; the box does not enclose a grid point
  - incorrect specification of the box: the left longitude must be less than the right longitude
    - to go from 10W (left) to 10E (right), use leftlon=350 and rightlon=370
    - to go from 10E (left) to 10W (right), use leftlon=10 and rightlon=350
  - grib_filter is not configured correctly (configuration problem)
  - wgrib2 does not support the input grid type (configuration problem)
- Why isn't XYZ handled by grib_filter? The data is already on the site.
  - Ask the nomads help desk.
- The subset takes more disk space than the non-subset grib file
  - Grib compression can be enabled in the configuration.
  - Ask the nomads help desk to enable grib compression.
- The downloads are slow; the download session times out
  - Ask the nomads help desk for help.
  - Break the request into two smaller requests.
  - The problem should be reduced when the input files go from the slow JPEG2000 compression to complex packing.
- Request that grib_filter handle multiple files.
  - I don't think it is a good idea. You don't want a single command that can access TBs of data (think of a reanalysis).
  - Learn to script multiple requests.
- Why is grib_filter still beta? Is it going to change?
  - 4/2016: The code hasn't been updated since it was first installed back in 2008. I hear that NCO wants to change the code from perl to php.
- Is the grib_filter source code available?
  - I can't give you the 2008 fork that NCO is running.
  - I can give you the development-nomads version of the code (2014).
- How does grib_filter work?
  - Grib_filter is a perl script that generates the web pages and calls wgrib2 to create the subset.
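The longitude rule for the box (left longitude less than right longitude, with values past 360 used when the box crosses the prime meridian, as in the 10W to 10E example) can be wrapped in a small helper that prints the leftlon and rightlon arguments. This is a minimal sketch, assuming whole-degree longitudes and that grib_filter accepts these normalized values; the name box_lons is hypothetical.

```bash
#!/bin/bash
# Sketch (hypothetical helper): given the west and east edges of a box in whole
# degrees (negative = west), print leftlon/rightlon arguments with leftlon < rightlon.
box_lons() {
  local west=$1 east=$2
  # map both edges into 0..359 (bash % keeps the sign, so add 360 first)
  west=$(( (west % 360 + 360) % 360 ))
  east=$(( (east % 360 + 360) % 360 ))
  # if the box crosses 0E (or the edges coincide, i.e. a full circle),
  # push the east edge past 360 so that it stays larger than the west edge
  if (( east <= west )); then
    east=$(( east + 360 ))
  fi
  printf 'leftlon=%d&rightlon=%d\n' "$west" "$east"
}
```

With these rules, box_lons -10 10 gives leftlon=350&rightlon=370 and box_lons 10 -10 gives leftlon=10&rightlon=350, matching the two examples in the list above.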
